
When we look at the next five to ten years, when artificial intelligence (AI) and sensors will be integrated into literally everything, we're looking at an era where you go home to your living room and turn on your TV, and while the TV is watching you, it's talking to the fridge, it's talking to the toaster, it's talking to your bedroom, it's talking to your bathroom, it's talking to your car, it's talking to your mailbox. So you're sitting in your most private space, you're constantly being watched, and there's a whole silent conversation going on around you.

The purpose of that conversation, in an economic context where you're being optimised as a consumer, is to work out what these devices should show you to make you a better customer. So you end up being stripped of your basic agency, because decisions are being made for you without your involvement.

This leads to a really fundamental question about being human in digital spaces. In nature, things affect us, but the weather or animals don't try to harm us. But when you're walking in a city where the city itself is thinking about how to optimise you, you become a participant in a space that is trying to dictate your choices. How can you exercise free choice when the city around you, the buildings around you, the devices around you – literally everything – are trying to pre-select what you see in order to influence that choice? When you're in a space that is watching you, how do you get out of it? How do you exercise your autonomy as a person? You can't. We really need to start thinking about what we're building with AI and what we're putting ourselves into, because once we've created that space, there's no way out of it.

That's why we need to regulate AI, and we need to do it quickly. If we look at the way we regulate other sectors, the first thing we do is articulate a set of harms that we want to avoid. If it's an aeroplane, we don't want it to crash; if it's medicine, we don't want it to poison people. There are a number of harms that we already know AI is contributing to, and we want to avoid them in the future: for example, disinformation, deception, racism and discrimination. We don't want an AI that contributes to decision-making with a racial bias. We don't want AI that cheats us. So the first step is to work out what we don't want AI to do. The second is to create a testing process so that the things we don't want to happen don't happen.

We test aeroplanes for potential flaws so that they don't fall out of the sky. Similarly, if we don't want an algorithm that amplifies false information, we need to find a way to test for that. How is it designed? What kind of inputs does it use to make decisions? How does the algorithm behave on a test sample? Going through a testing process is a really key aspect of regulation. Imagine that, prior to release, the designers find that an algorithm used by a bank has a racial bias: in the test sample, it grants more mortgages to white people than to black people. We've assessed the risk and can now go back and fix the algorithm before it's released.

It's relatively easy to regulate AI using the same kind of process that we use in other industries. It's a question of risk mitigation, harm reduction and safety before the AI is released to the public.

Instead, what we're seeing right now is Big Tech running huge experiments on society and finding out what goes wrong as they go along. Facebook, for example, discovers the problems as it goes along and then apologises for them.

Then it decides whether or not the affected population is worth fixing it for. If there's a genocide in Myanmar, which is not a big market, it isn't going to do anything about it, even though genocide is a crime against humanity. Facebook admitted that it had not considered how its recommendation engine would work in a population with a history of religious and ethnic violence. You could require a company to assess this before releasing a new application.

The problem with the idea of "move fast and break things" [a motto popularised by Facebook CEO Mark Zuckerberg] is that it implicitly says, "I'm entitled to hurt you if my app is cool enough; I'm entitled to sacrifice you to make an app." We don't accept that in any other industry. Imagine if pharmaceutical companies said, "We'll get faster results in cancer treatment if you don't regulate us and let us do whatever experiments we want." That might be true, but we don't want that kind of experimentation; it would be disastrous.

We really need to keep that in mind as AI becomes more important and more integrated into every sector. AI is the future of everything: scientific research, aerospace, transportation, entertainment, maybe even education. Huge problems arise when we start to integrate unsafe technologies into other sectors. That's why we need to regulate AI.

This is where regulatory capture comes in. Regulatory capture is when an industry tries to make the first move so that it can define what should be regulated and what shouldn't. The problem is that its proposals tend not to address today's harms, but rather theoretical harms at some point in the future. When you have a group of people saying, "We're condemning humanity to extinction because we've created the Terminator robot, so regulate us", what they're really saying is: "Regulate this problem that isn't real at the moment. Don't regulate this very real problem that would stop me from releasing products that contain racist information, that could harm vulnerable people, that could encourage people to kill themselves. Don't regulate real problems that force me to change my product."

When I look at the world tours of these tech moguls talking about their grand vision of the future, saying that AI is going to dominate humanity and that we therefore need to take regulation seriously, it sounds sincere. But to me, it really sounds like they're offering to help create a set of rules for things they don't have to change.

Look at the proposals they released a few weeks ago [in May 2023]: they want to create a regulatory framework around OpenAI in which governments would give OpenAI and a few other big tech companies a de facto monopoly on developing products, in the name of safety. This could even lead to a regulatory framework that prevents other researchers from using AI.

The problem is that we're not having a balanced conversation about what should be regulated in the first place. Is the problem the Terminator, or the fragmentation of society? Is it disinformation? Is it incitement to violence? Is it racism? If you're speaking from a privileged position – let's say, as a rich white man somewhere in the San Francisco Bay Area – you're probably not thinking about how your new app is going to hurt a person in Myanmar.

You're just thinking about whether it hurts you. Racism doesn't hurt you; political violence doesn't hurt you. We're asking the wrong people about harms. Instead, we need to involve more people from around the world, particularly from vulnerable and marginalised communities, who will experience the harms of AI first. We need to talk not about some grand idea of future harms, but about the harms affecting them today in their own countries, and about what will stop those harms from happening.

Many of the problems that society will experience with AI are almost analogous to climate change. You can buy an electric car, you can do some recycling, but at the same time it's a systemic problem, and systemic problems require systemic approaches. So I would suggest being active in demanding that leaders do something about it.

Spending political capital on this issue is the most important thing people can do, more important than joining Mastodon or deleting your cookies. What makes the development of AI so unfair is that it is designed by big American companies that don't necessarily think about the rest of the world. The voices of small countries matter less to them than the voices of key swing states in the United States.

If you look at what's been done in Europe with GDPR, for example, it doesn't address the underlying design. It creates a set of standards for how people consent or don't consent, and at the end of the day, everyone opts in.

That's not the right framework for a regulatory approach, because we're treating technology like a service rather than like architecture, and so we're regulating safety through consent. It's as if we regulated the safety of buildings by simply putting a sign on the door saying that, by accepting the Terms of Use, you consent to the shoddy design and construction of the building, and if it falls down it doesn't matter because you've consented.

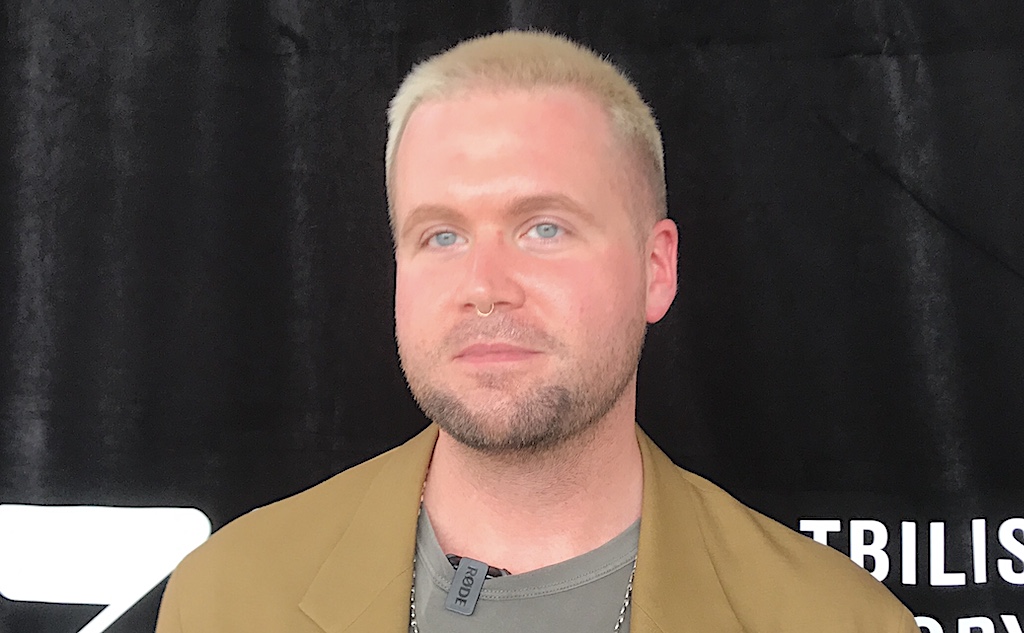

This text is a transcription of Christopher Wylie's talk at the ZEG storytelling festival in Tbilisi in June 2023. It was recorded by Gian-Paolo Accardo and edited for clarity by Harry Bowden.
